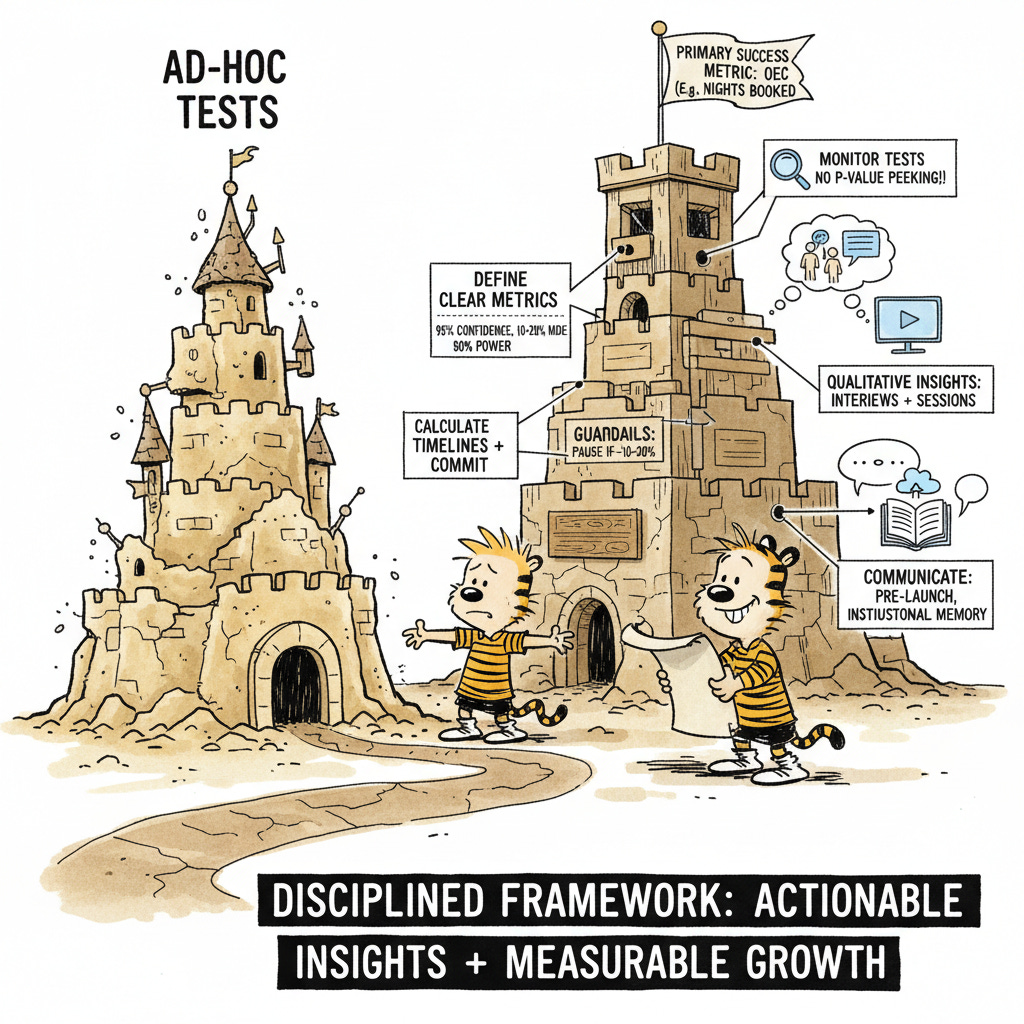

Transform Your Ad-Hoc Tests into a Structured Growth Engine

Let me show you exactly how the best product teams run A/B tests to consistently achieve meaningful, measurable results.

Most A/B testing is broken, and you’ve probably felt it. Teams run test after test, chase promising metrics, and still end up with inconclusive results or, worse, decisions based on noise rather than signal. The solution isn’t more testing; it’s better testing.

Start With the Right Foundation

Your testing platform isn’t just another tool in your stack; it’s the backbone of every experiment you’ll run.

You need something that makes test setup straightforward, provides clear and reliable data, and highlights statistical significance without leaving you guessing.

The wrong platform will create friction at every step. The right one becomes invisible, letting you focus on insights rather than infrastructure.

Define Your Metrics Before You Start

Here’s where most teams stumble: they launch tests without clearly defining what success looks like. By the time results come in, they’re left interpreting ambiguous data and debating what actually matters. Set your standards upfront and save yourself the headache.

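Start with your confidence level. Aim for at least 95% confidence, which corresponds to a significance threshold (alpha) of 0.05: you call a result significant only when its p-value falls below 0.05. This means you’re accepting a 5% chance of declaring a winner when there is no real difference between your variants.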

Then establish your Minimum Detectable Effect, typically targeting a 10-20% improvement depending on your market dynamics, traffic volume, and the importance of what you’re testing.

Finally, set your statistical power around 80%, so that a real effect at least as large as your Minimum Detectable Effect gets detected about 80% of the time.
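
To see how these three settings interact, here’s a rough sketch of a sample-size estimate for a two-sided, two-proportion z-test using the standard normal approximation. The function name, the 5% baseline conversion rate, and the reading of the Minimum Detectable Effect as a relative lift are all assumptions for illustration; your testing platform may use a different formula.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_mde, alpha=0.05, power=0.80):
    """Approximate users needed per variant (two-sided two-proportion z-test)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)        # rate the variant would need to reach
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical example: 5% baseline conversion, 10% vs. 20% relative lift.
n_10 = sample_size_per_variant(0.05, 0.10)
n_20 = sample_size_per_variant(0.05, 0.20)
print(f"10% MDE: {n_10:,} users per variant")
print(f"20% MDE: {n_20:,} users per variant")
```

With these assumed inputs, halving the effect you want to detect roughly quadruples the traffic you need (on the order of 31,000 versus 8,000 users per variant), which is why the Minimum Detectable Effect deserves a deliberate choice.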

Calculate Your Timeline and Commit to It

One of the most common mistakes in A/B testing is leaving experiments open-ended, checking them periodically, and calling them “done” whenever results look favorable. This is a recipe for false positives and wasted effort.

Instead, calculate your timeline based on historical data. At Keep, we’d pull a 90-day average of the action we were testing (say, button clicks), break it down to a weekly average, and target approximately 1,000 conversions per variant.

This gave us a clear endpoint before we even launched.
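
The arithmetic is simple enough to script. Here’s a minimal sketch of it, assuming an even traffic split across variants; the function name and the 9,000-click figure are made up for illustration.

```python
from math import ceil


def weeks_to_run(conversions_last_90_days, variants=2, target_per_variant=1000):
    """Estimate how many full weeks a test needs to reach the conversion target."""
    weekly_conversions = conversions_last_90_days / 90 * 7
    weekly_per_variant = weekly_conversions / variants  # assumes an even split
    return ceil(target_per_variant / weekly_per_variant)


# Hypothetical numbers: 9,000 button clicks over the last 90 days, two variants.
# 700 clicks per week -> 350 per variant -> 3 weeks to reach 1,000 each.
print(weeks_to_run(9000))  # 3
```

Rounding up to whole weeks also keeps every day of the week equally represented in the test.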

If a test didn’t achieve statistical significance by that timeline, we documented it clearly, concluded the test, made a decision, and moved forward. Sometimes that meant adjusting our approach and planning a retest with a longer timeline or a different design.

Choose One North Star Metric

When you track everything, you understand nothing.

Too many metrics create confusion and make it nearly impossible to make clean decisions. The solution is ruthlessly simple: choose one primary metric as your Overall Evaluation Criterion (OEC).

Airbnb does this brilliantly. Their primary OEC is the number of nights booked.

Not clicks, not searches, not wishlist additions. Just nights booked.

This single metric simplifies every experimental decision across the entire company. You can supplement this with two or three secondary metrics for additional context, but your primary metric should be sacred. Everything else is just commentary.

Establish Guardrails Before Launch

The best teams prepare for both success and failure before they launch a single test.

Start by clearly articulating your hypothesis in a simple if-then statement: “If we change X, we expect Y result because of Z reasoning.” This forces clarity and prevents retroactive justification of random results.

Then set up guardrails that will pause your test if something goes wrong. If your core conversion metric drops by 10-20%, you want the test stopped immediately, not discovered days later when you finally check the dashboard. These guardrails protect your business while you’re experimenting. This is also something that I monitor manually when possible.

As testing programs scale, manual monitoring gets harder, so it’s advisable to automate these checks.
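
An automated guardrail can be as small as one function that runs on a schedule. The sketch below is one way to express the check, not a prescribed implementation: the function name, the 10% threshold (the low end of the range above), and the `min_users` floor are assumptions, and the step that actually pauses the test depends on your platform, so it isn’t shown.

```python
def guardrail_breached(control_conv, control_users, variant_conv, variant_users,
                       max_relative_drop=0.10, min_users=500):
    """Return True when the variant's conversion rate trails control by the limit or more."""
    if control_users < min_users or variant_users < min_users:
        return False  # too little data to trust the comparison yet
    control_rate = control_conv / control_users
    variant_rate = variant_conv / variant_users
    if control_rate == 0:
        return False  # no baseline to compare against
    relative_change = (variant_rate - control_rate) / control_rate
    return relative_change <= -max_relative_drop


# Hypothetical counts: control converts at 5.0%, variant at 4.0% (a 20% drop).
print(guardrail_breached(100, 2000, 80, 2000))  # True
# A 3% relative drop stays inside the guardrail.
print(guardrail_breached(100, 2000, 97, 2000))  # False
```

The minimum-users floor is there so that a noisy first hour doesn’t pause every test you launch.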

Run Your Tests With Discipline

Once your test is live, monitor it carefully but resist the urge to make decisions based on early results. You should be checking your metrics regularly to ensure nothing is going catastrophically wrong, but checking to see if you’re winning is different from checking to make sure you’re not breaking things.

This is the trap of what we call “p-value peeking.” When you see your variant pulling ahead early, it’s incredibly tempting to call it a winner and ship it. But premature analysis based on incomplete data dramatically increases your chance of false positives. Early patterns often don’t hold as more data comes in.
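
You can see the inflation for yourself with a small simulation. Here’s a minimal sketch, assuming a pooled two-proportion z-test and A/A experiments, where both arms share the same true conversion rate and every “significant” result is therefore a false positive. The 10% rate, ten looks, 200 users per arm per look, and the seed are arbitrary choices for illustration.

```python
import random
from math import sqrt
from statistics import NormalDist


def p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate(runs=300, looks=10, users_per_look=200, rate=0.10, alpha=0.05, seed=7):
    """Run A/A tests; count false positives with peeking vs. one final analysis."""
    rng = random.Random(seed)
    peeking_hits = final_hits = 0
    for _ in range(runs):
        conv_a = conv_b = n = 0
        significant_at_any_look = False
        for _ in range(looks):
            # Both arms draw from the same true rate, so there is no real effect.
            conv_a += sum(rng.random() < rate for _ in range(users_per_look))
            conv_b += sum(rng.random() < rate for _ in range(users_per_look))
            n += users_per_look
            p = p_value(conv_a, n, conv_b, n)
            if p < alpha:
                significant_at_any_look = True
        peeking_hits += significant_at_any_look
        final_hits += p < alpha  # p here comes from the last look only
    return peeking_hits / runs, final_hits / runs


peeking_rate, fixed_rate = simulate()
print(f"call it at any significant peek: {peeking_rate:.1%} false positives")
print(f"analyze once at the end:         {fixed_rate:.1%} false positives")
```

Because the final look is one of the peeks, the peeking rate can never come out lower than the single-analysis rate, and with repeated looks it typically lands well above the nominal 5%.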

So watch your test, absolutely. Set guardrails that will alert you if key metrics drop significantly. If your core conversion metric declines by 10-20%, pause the test immediately to investigate. You can always restart a test, but you can’t undo real damage to your business.

But don’t make positive decisions early. Set your timeline and trust it. Let the test run its full course before you declare a winner.

While you’re waiting, complement your quantitative data with qualitative feedback. Run user interviews, watch session recordings, gather context that numbers alone can’t provide. When your test concludes and you have results, this qualitative insight helps you understand not just what happened, but why it happened and what the actual user experience was like.

And if your results seem too good to be true, they probably are. Use Twyman’s Law as your gut check: any figure that looks interesting or different is usually wrong. When something seems unrealistic, run a replication test before you roll it out company-wide.

Document Everything

Yes, documentation feels tedious. Yes, it’s time-consuming. And yes, it’s absolutely crucial. Record exactly what you tested, which metrics you tracked, what the outcomes were, and what decisions you made based on those outcomes.

This documentation becomes your team’s institutional memory. At Lendio, we maintained a central repository of experiments that anyone in the company could access. New team members could quickly learn from past tests. Product managers could avoid redundant experiments. Executives could understand the empirical foundation behind major product decisions. Without documentation, you’re doomed to repeat the same experiments over and over, learning nothing.
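
If you don’t have a template yet, a small structured record is enough to start. The sketch below is one possible shape, not the format we used at Lendio; every field name and the sample values are hypothetical.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class ExperimentRecord:
    """One entry in a shared experiment repository (illustrative fields only)."""
    name: str
    hypothesis: str                 # "If we change X, we expect Y because of Z"
    primary_metric: str             # the single metric the decision rests on
    secondary_metrics: list = field(default_factory=list)
    planned_weeks: int = 0
    outcome: str = ""               # e.g. "significant win", "inconclusive"
    decision: str = ""              # what the team did with the result


# Hypothetical entry for illustration.
record = ExperimentRecord(
    name="checkout-button-copy",
    hypothesis="If we shorten the button copy, more users will click "
               "because the action is clearer.",
    primary_metric="button clicks",
    secondary_metrics=["completed checkouts"],
    planned_weeks=3,
    outcome="inconclusive",
    decision="Kept control; retest planned with a longer timeline.",
)
print(json.dumps(asdict(record), indent=2))
```

Serializing to JSON keeps each record easy to store in whatever repository or wiki your team already uses.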

Communicate Constantly

The best testing programs aren’t run in isolation; they’re transparent and collaborative. Establish a clear communication cadence: pre-launch updates, launch announcements, kill updates when you pause tests, and results summaries when experiments conclude.

At Keep, Lendio, and Forge, we used dedicated Slack channels for experiments, ensuring stakeholders always knew what was running, why we were running it, and what we were learning. This transparency builds trust, prevents surprises, and helps everyone understand that good testing includes both wins and losses.

Structured A/B testing isn’t just another process to follow; it’s your strongest tool for confident decision-making and measurable growth. When you move from ad-hoc experimentation to a disciplined testing framework, you transform uncertainty into actionable insights.

Stop spinning your wheels. Start making real, measurable progress today.